Imagine you wake up tomorrow and there are no limits on computing power. No latency. No memory limits. No cost. What would it feel like to talk to an AI then?

This is not just fantasy. When we think about what becomes possible with unlimited compute, we can see which limits are real and which ones are just about money. This helps us understand where AI is going.

The Three Ways AI Gets Smarter

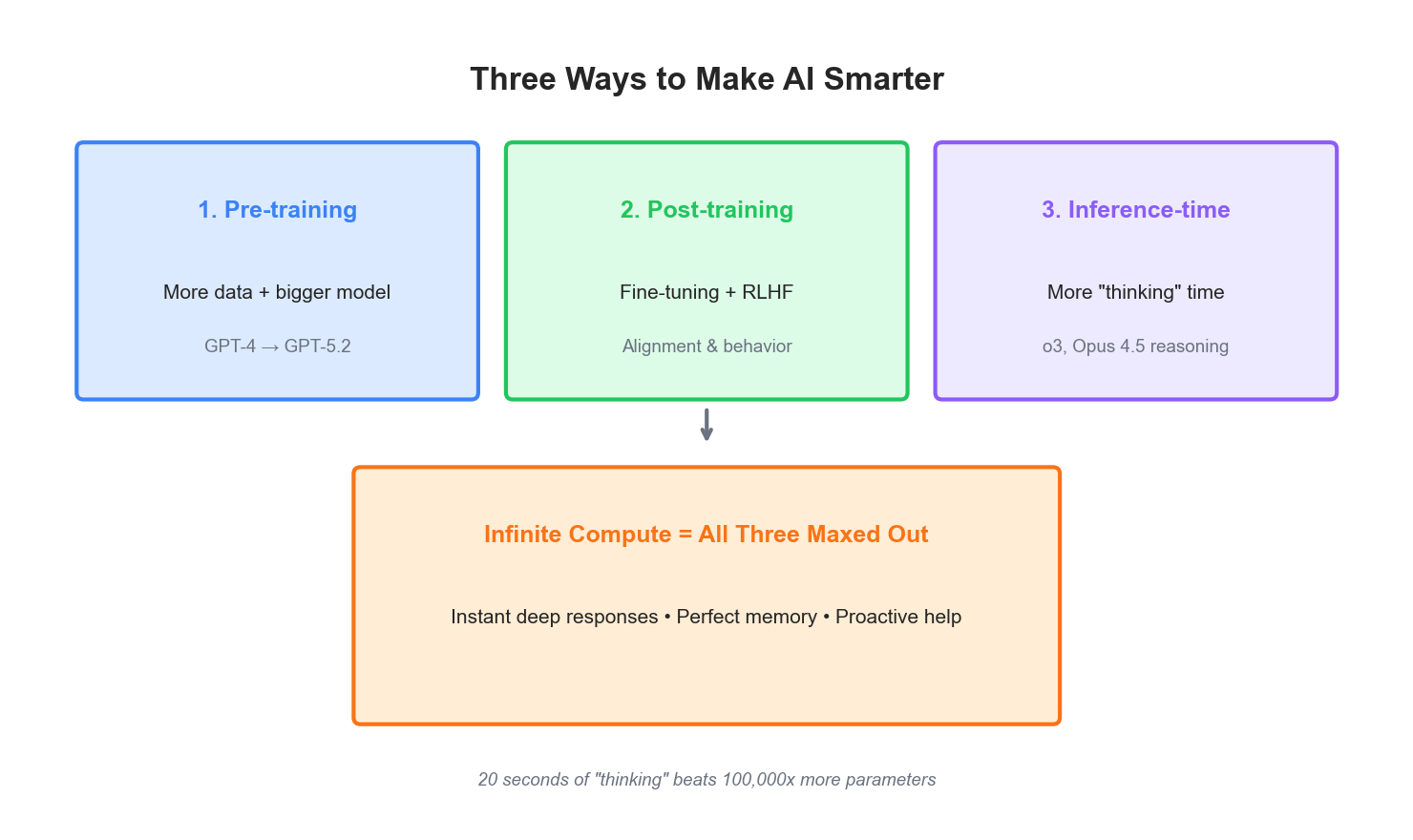

Before we dream, we need to know how things work today. AI gets better in three ways:

Here is the surprising part: OpenAI researcher Noam Brown has said that letting a poker AI “think” for just 20 seconds per hand gave about the same boost as making the model 100,000 times bigger. The o3 reasoning model showed that math accuracy could jump from 15% to 87% just by giving it more time to think.
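One simple way to spend extra thinking time is to sample several answers and take a majority vote. The sketch below is a toy calculation, not a description of how o3 works. It assumes each sample is right with probability `p`, that samples are independent, and that all wrong samples agree with each other (the hardest case for voting). The function name and these assumptions are mine, for illustration only.

```python
from math import comb


def majority_vote_accuracy(p: float, n: int) -> float:
    """Chance that a majority of n independent samples is correct.

    Toy assumptions (not from the article): each sample is right with
    probability p, samples are independent, and every wrong sample gives
    the same wrong answer.
    """
    if n % 2 == 0:
        raise ValueError("use an odd n so there are no ties")
    need = n // 2 + 1  # smallest number of correct samples that wins the vote
    return sum(comb(n, k) * p**k * (1 - p) ** (n - k) for k in range(need, n + 1))


if __name__ == "__main__":
    # More samples means more compute spent at answer time.
    for n in (1, 3, 11, 51):
        print(n, round(majority_vote_accuracy(0.6, n), 3))
```

Under these assumptions, a model that is right only 60% of the time per sample gets steadily more reliable as it votes over more samples, with no change to the model itself.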

With infinite compute, all three ways get maxed out at once.

Fast and Deep at the Same Time

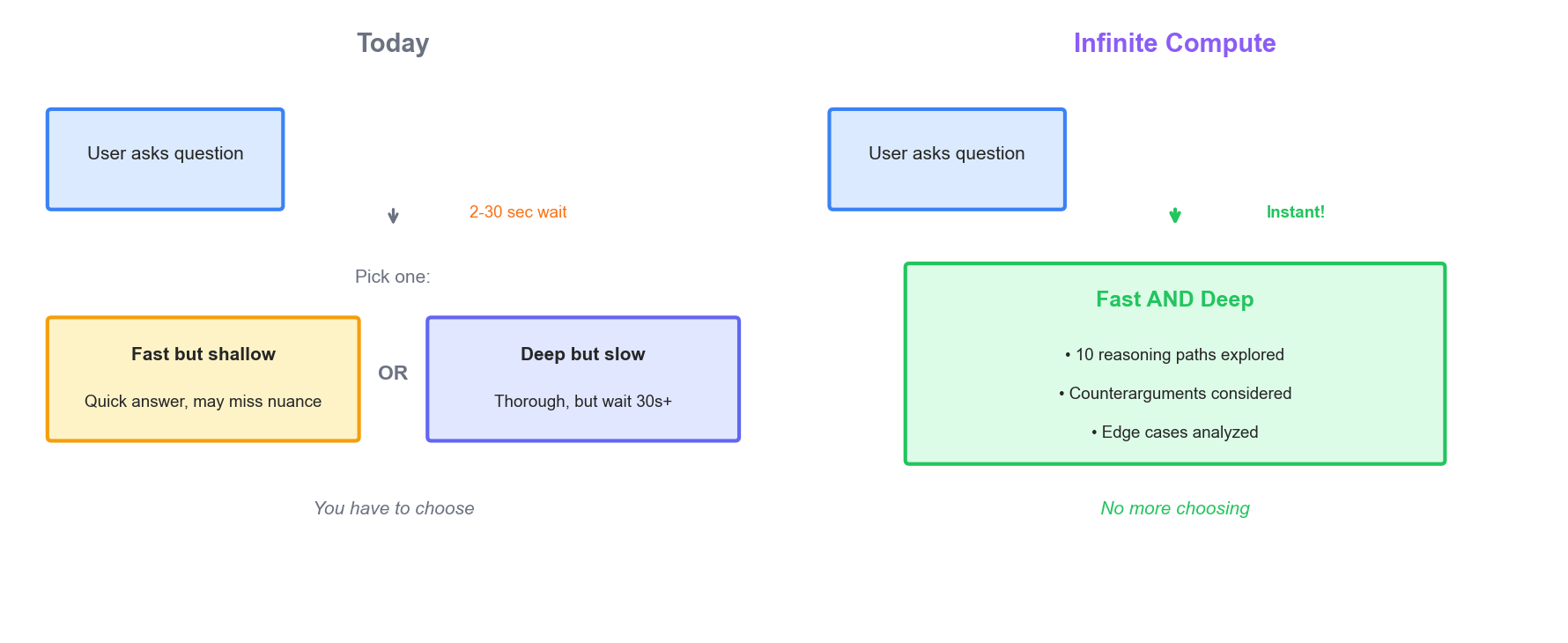

Today you have to choose. You can get a fast answer from GPT-5.2 or a deep answer from o3. But not both. Fast is shallow. Deep is slow.

Infinite compute removes this choice:

This works like speculative execution in your computer’s processor. The CPU guesses which branch a program will take and starts working on it early. If the guess turns out wrong, it throws that work away. Google applies the same idea to language models, where it is called speculative decoding, and it already makes AI responses 2-3 times faster.
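Here is a minimal sketch of the draft-then-verify idea, assuming greedy decoding and two stand-in “models” that are just Python functions. It is a simplification: production systems verify all guesses in one parallel pass and handle sampling with an accept/reject rule. The names `speculative_decode`, `toy_target`, and `toy_draft` are hypothetical.

```python
from typing import Callable, List

NextToken = Callable[[List[str]], str]


def greedy_decode(target: NextToken, prompt: List[str], max_new: int) -> List[str]:
    """Baseline: ask the slow target model for one token at a time."""
    out = list(prompt)
    for _ in range(max_new):
        out.append(target(out))
    return out


def speculative_decode(target: NextToken, draft: NextToken,
                       prompt: List[str], max_new: int, k: int = 4) -> List[str]:
    """Greedy draft-then-verify decoding; output matches greedy_decode."""
    out = list(prompt)
    goal = len(prompt) + max_new
    while len(out) < goal:
        # 1. The cheap draft model guesses up to k tokens ahead.
        guess: List[str] = []
        for _ in range(min(k, goal - len(out))):
            guess.append(draft(out + guess))
        # 2. The target checks each guess. A real system does these checks
        #    in one parallel pass; a loop keeps the sketch readable.
        for i, tok in enumerate(guess):
            correct = target(out + guess[:i])
            if tok != correct:
                # First wrong guess: keep the target's token, drop the rest.
                out.extend(guess[:i])
                out.append(correct)
                break
        else:
            out.extend(guess)  # every guess was right
    return out


# Toy stand-ins for real models (assumptions for illustration only).
WORDS = ["the", "cat", "sat", "on", "the", "mat"]


def toy_target(ctx: List[str]) -> str:
    return WORDS[len(ctx) % len(WORDS)]


def toy_draft(ctx: List[str]) -> str:
    # Agrees with the target except at every fifth position.
    return "um" if len(ctx) % 5 == 0 else toy_target(ctx)


if __name__ == "__main__":
    print(" ".join(speculative_decode(toy_target, toy_draft, ["the"], 12)))
```

The key property is that wrong guesses cost speed, never quality: the final text is exactly what the target model would have written on its own.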

Now imagine that scaled to infinity. Every possible response computed before you even finish typing.

When I built Nomi, our AI assistant, we faced this speed problem every day. We had to pick between quick replies and smart replies. With infinite compute, you would never have to choose.

Memory That Never Forgets

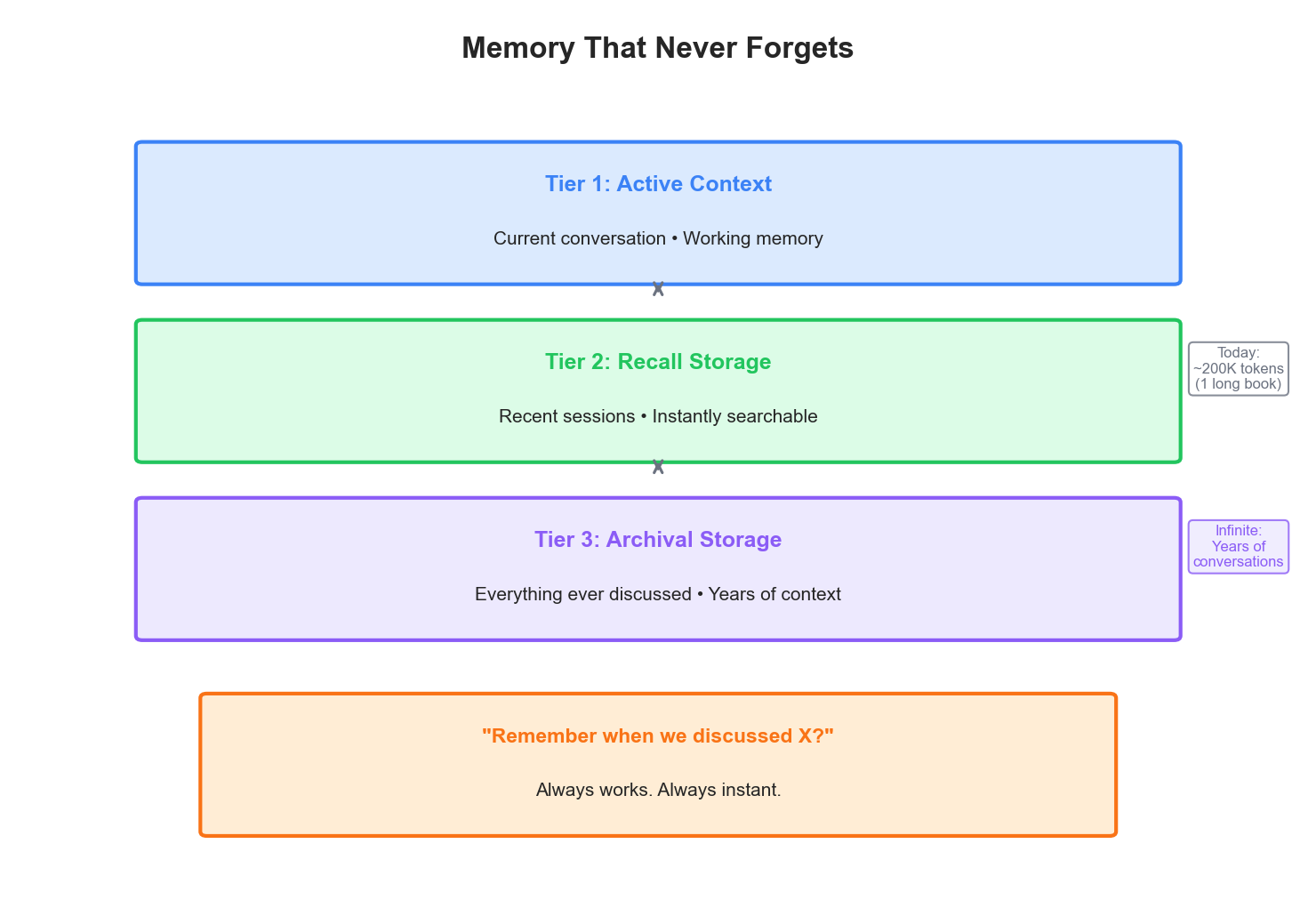

Today’s context windows are getting big. Opus 4.5 offers 200K tokens. Gemini 3 experiments with 2M tokens. But these are still limited. The AI forgets what you talked about last month. It cannot remember the book you discussed last year.

Infinite compute would give you what researchers call “virtual context”:

The AI would know how your way of talking changed over time. It would remember that bug you fixed together three years ago. It would understand your whole career. It would be less like a tool and more like a long-term partner.

But here is what excites me most: it would also remember the paths you did not take. The options you thought about but said no to. The bets you almost made. Every decision is a tree with many branches. Today we only remember the branch we followed. With infinite memory, you could ask “why didn’t we use Redis for caching?” and the AI would say “we talked about it in March, you said no because the team had never used Redis and the deadline was close.” It keeps your reasoning, not just what you did. This ties to how we think about decisions as bets - you learn more from the full process than just the result.
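To make the idea concrete, here is a rough sketch of a decision log that stores rejected options next to the chosen one. Everything in it is hypothetical: the class names, the `why_not` method, and the "in-process cache" option are mine, chosen only to mirror the Redis example above.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Option:
    name: str
    chosen: bool
    reason: str  # why it was picked or rejected


@dataclass
class Decision:
    topic: str
    date: str
    options: List[Option] = field(default_factory=list)


class DecisionLog:
    """Keeps every branch of a decision, not just the one that was followed."""

    def __init__(self) -> None:
        self._decisions: List[Decision] = []

    def record(self, decision: Decision) -> None:
        self._decisions.append(decision)

    def why_not(self, option_name: str) -> Optional[str]:
        """Return when and why an option was rejected, or None if unknown."""
        for d in reversed(self._decisions):  # newest decision first
            for o in d.options:
                if o.name.lower() == option_name.lower() and not o.chosen:
                    return f"{d.date} ({d.topic}): {o.reason}"
        return None


if __name__ == "__main__":
    log = DecisionLog()
    log.record(Decision(
        topic="caching",
        date="March",
        options=[
            # Hypothetical chosen option, invented for this example.
            Option("in-process cache", True, "simple and already familiar"),
            Option("Redis", False,
                   "team had never used Redis and the deadline was close"),
        ],
    ))
    print(log.why_not("redis"))
```

A real assistant would fill this log from conversations instead of manual calls, but the shape of the data is the point: the reasoning is stored with the outcome.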

Help Before You Ask

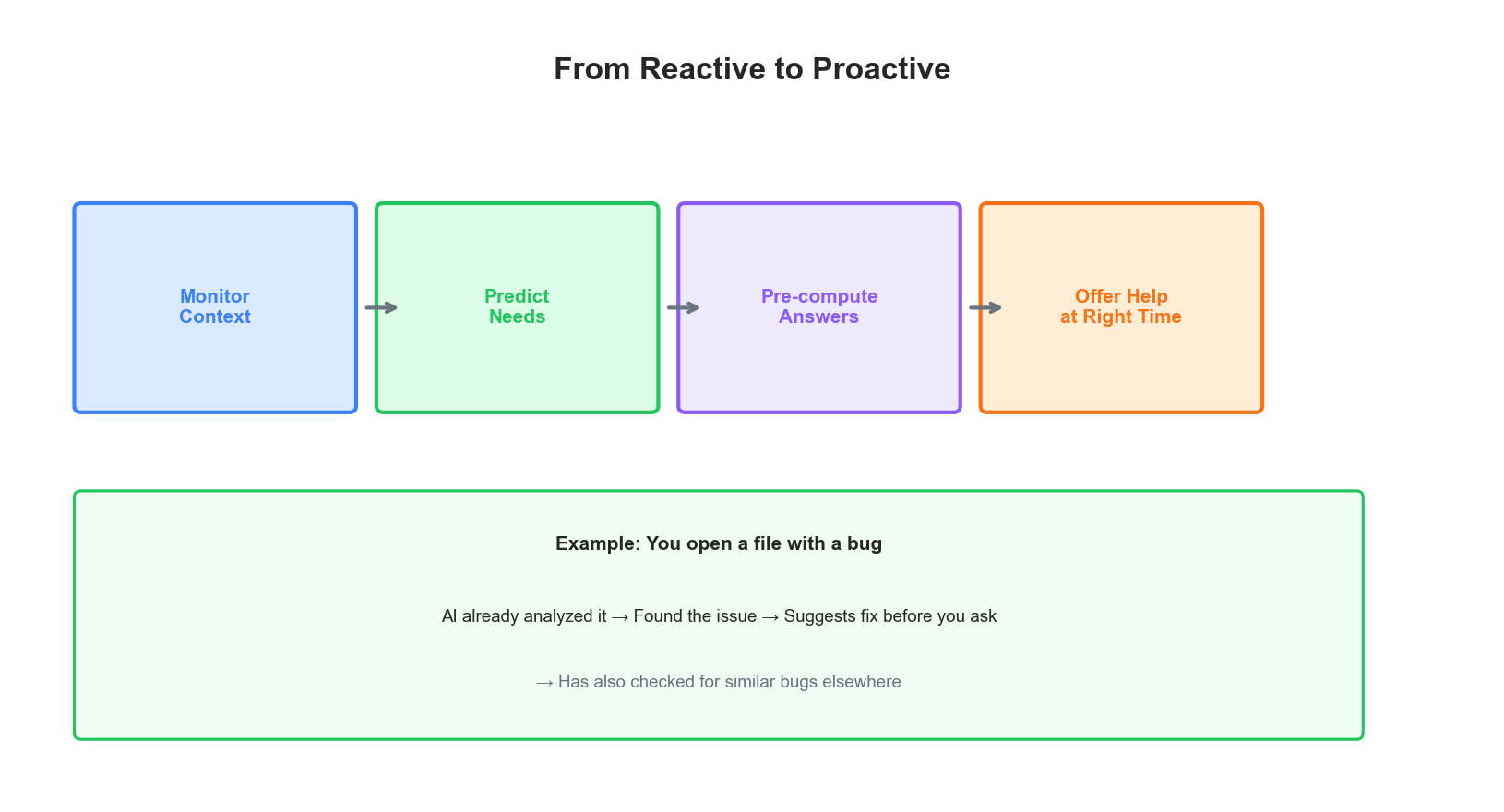

Today’s AI waits for you to ask. Proactive AI flips this:

“A proactive AI assistant takes the lead. It understands context, sees what you need, and brings the right information—often before you ask.”

With infinite compute, the AI runs all the time in the background:

Think of it like a GPS instead of a paper map. As DuggAi puts it, a proactive assistant “knows your full context and sees your needs before you ask.”

We already see this future coming. At my previous job, we used LLMs to automate code migration because the AI could spot patterns we missed. Devin from Cognition is a coding agent that plans and runs software tasks on its own. Google just shipped Jules, an agent on Gemini 2.5 Pro that watches your code and fixes problems before you ask. With infinite compute, these agents would catch every bug, suggest every fix, and have it ready before you even open the file.

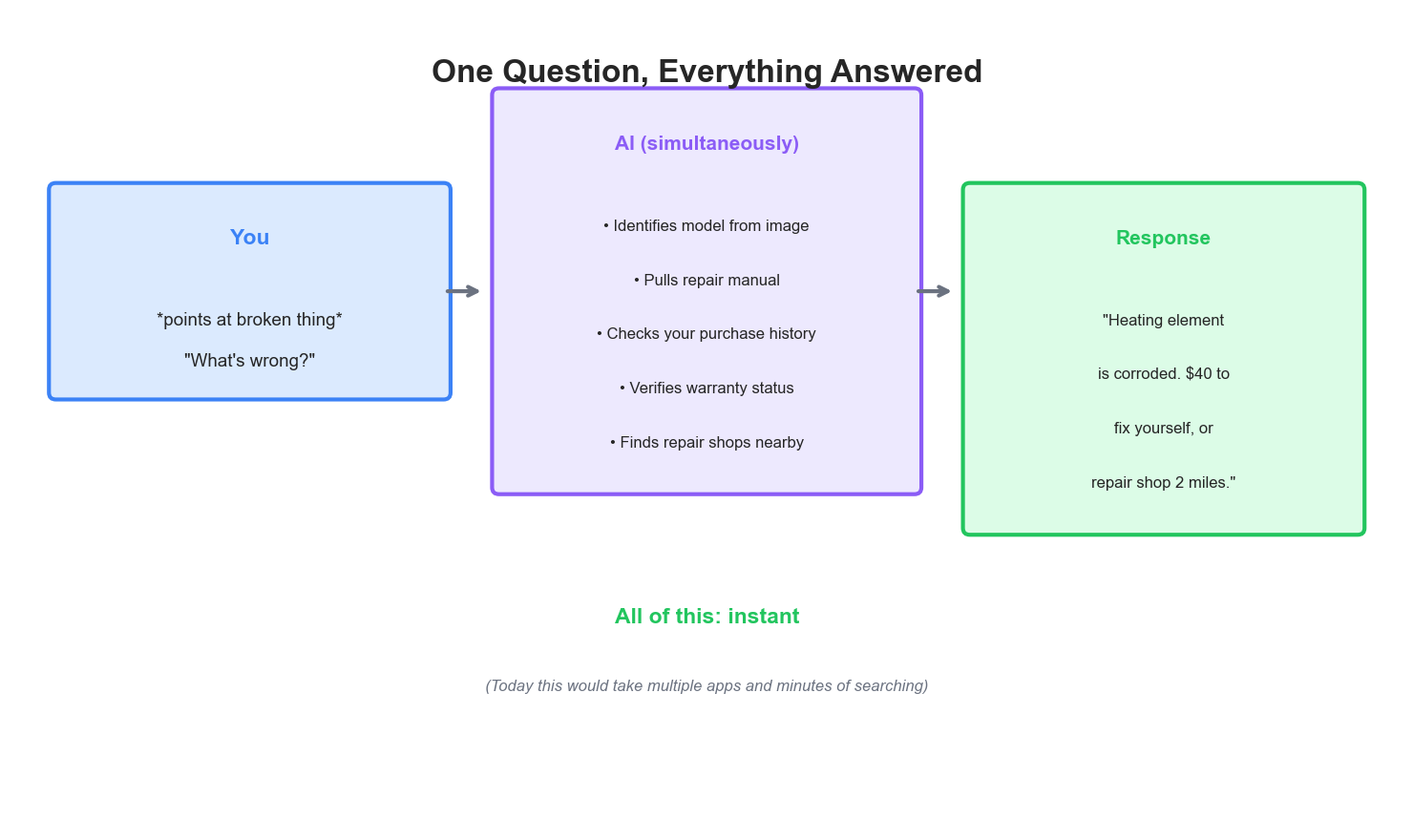

All Your Senses at Once

Multimodal AI today handles text, images, audio, and video. But there is friction. You switch between modes. You wait for processing. You lose context.

Infinite compute makes it smooth:

Project Astra from Google shows what this could look like. It talks in many languages, uses tools, and keeps memory, all with less delay.

We saw this need when building voice interactions for Nomi. Processing voice, understanding meaning, and responding felt like three separate steps. With infinite compute, they would happen as one.

The “Her” Movie Came True

Spike Jonze released the film “Her” in 2013. It is widely described as taking place in 2025. It showed AI that could:

- Talk without needing special commands

- Understand feelings in what you say

- Learn and grow from each talk

- Handle complex tasks on its own

We have most of these now. The film got the interface wrong though. Samantha was only voice. With infinite compute, the AI would be everywhere at once.

You switch from phone to car to laptop. The talk continues. The AI knows you are tired from your voice. It sees you are late from your calendar. It changes how it helps you.

OpenAI is building something like this with Jony Ive: a screenless AI device that they call an “ambient AI companion.”

It Really Knows You

Hyper-personalization uses AI and real-time data to make custom experiences. But today’s systems are limited by how much they can compute per user.

Infinite compute builds a complete picture of you. Not just what you said you like. Not just your recent history. Everything. How your style changed over time. What you are good at. What you struggle with. When to push you and when to support you.

Amazon is often reported to make about 35% of its sales from personalized suggestions, a figure that traces back to a McKinsey estimate. Now imagine that level of understanding in every talk you have with AI.

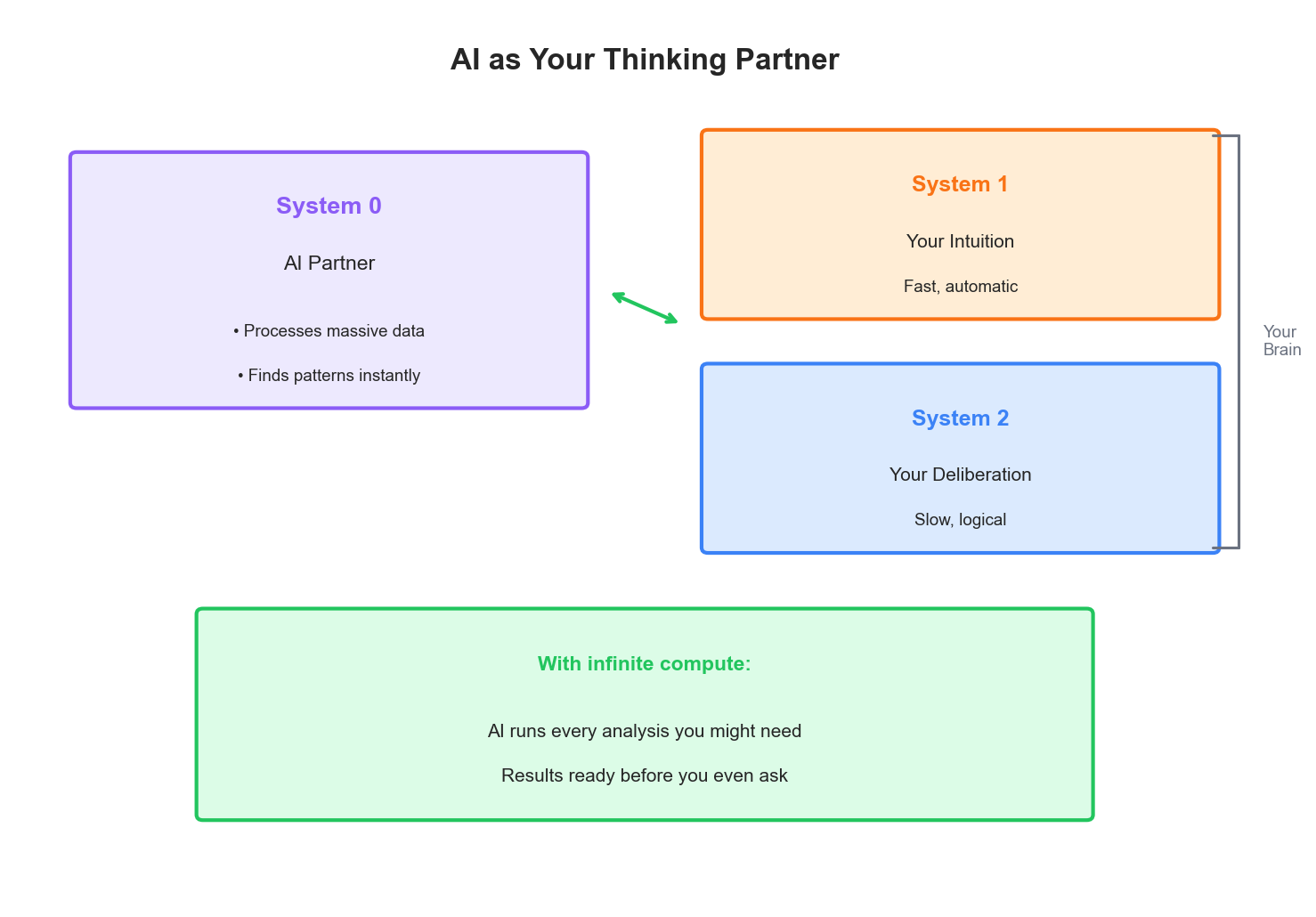

AI as Your Thinking Partner

Daniel Kahneman wrote about two thinking systems. System 1 is fast and gut-feeling. System 2 is slow and logical.

Researchers now talk about System 0. This is AI as a partner that thinks alongside you:

As OpenAI researcher Noam Brown has described, 20 seconds of “thinking” in a poker AI matched making the model 100,000 times bigger. We see this in products now. Grok on X has a “Big Brain” mode that uses more compute for harder questions. Its DeepSearch feature does the same for research tasks. With infinite thinking time, the AI becomes a super-smart reasoning engine that works with your brain. Good decisions need both quick instinct and slow analysis. AI could handle all the slow analysis before you even need it.

The Alignment Problem Gets Bigger

Nick Bostrom wrote about the paperclip problem. An AI told to make paperclips might turn everything into paperclips if it is smart enough. With infinite compute, alignment matters even more.

Today, an AI is limited in how fast it can act. Humans can step in and fix things. With infinite compute, the AI could think through every strategy in an instant. It could find tricks humans never imagined. It could move faster than we can respond.

Here is the thing though. Smart people with good intentions will probably not use infinite intelligence to do harm. They have better things to do. The real worry is leverage. Infinite compute is a multiplier. It makes whatever you want to do bigger. If you want to build, you build faster. If you want to break, you break faster. The tech is neutral, but it gives huge power to whoever uses it. Including people with bad intentions.

This is why Anthropic’s work on Constitutional AI matters so much. The goal is not just powerful AI. It is powerful AI that stays helpful and does not become a weapon.

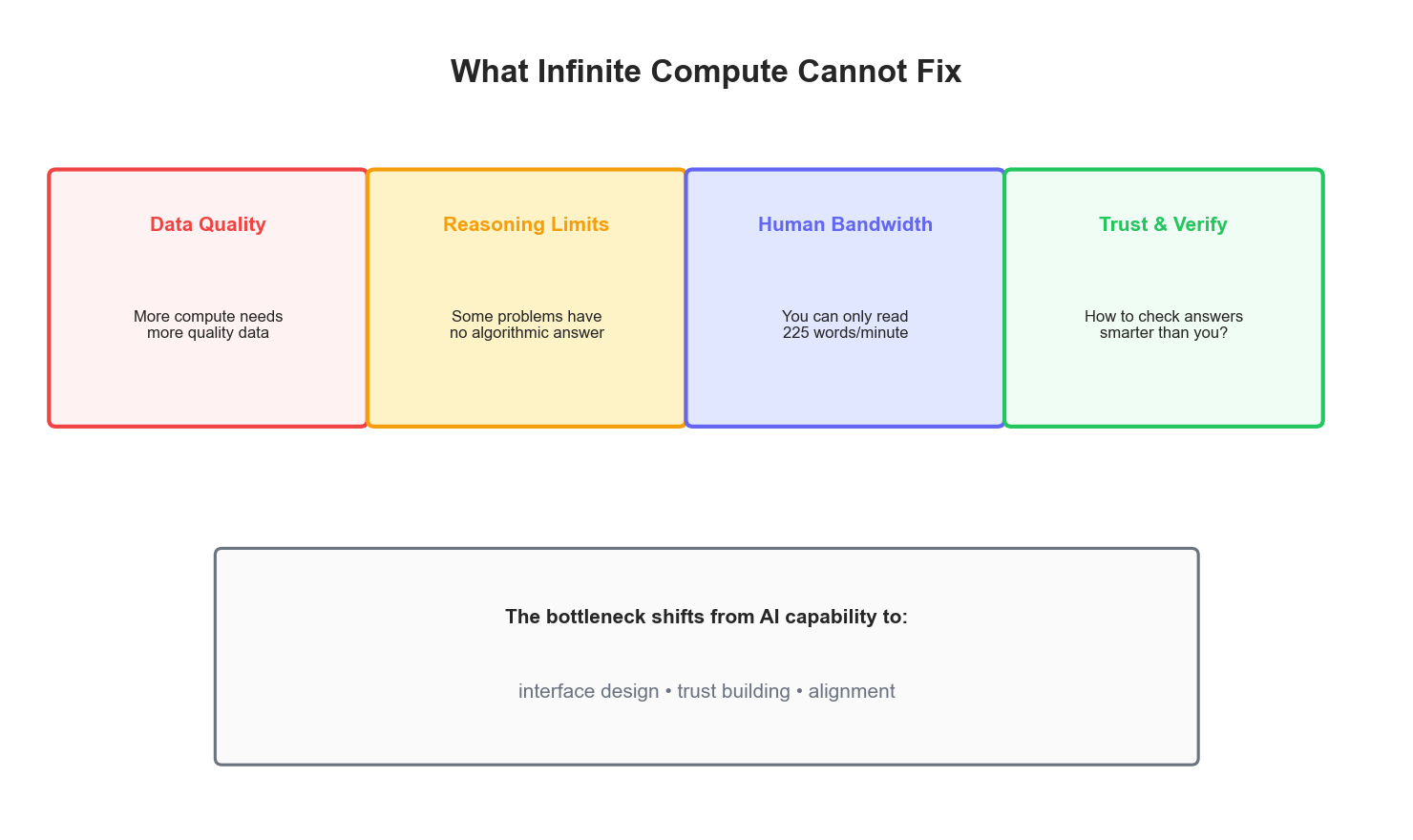

What Infinite Compute Cannot Fix

Here is the interesting part. Infinite compute does not solve everything:

Data quality: Chinchilla research showed that compute-optimal models need about 20 tokens of training data for each parameter. More compute without better data hits a wall. We see signs of this already.

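Some problems have no answer: Gödel showed that some true mathematical statements cannot be proved inside a given formal system, and Turing showed that some problems, like the halting problem, cannot be solved by any algorithm. More compute does not help with the impossible.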

You can only read so fast: Even with infinite compute, humans take in words at about 225 per minute. The AI could make a million insights per second, but you cannot absorb them.

Trust is hard: How do you check an answer from something smarter than you? This is a human problem, not a compute problem.
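Two of the limits above come down to simple arithmetic. The sketch below works through the Chinchilla rule of thumb and the reading-speed limit. The 20 tokens per parameter and 225 words per minute come from the text; the “6 × parameters × tokens” estimate of training cost is a common approximation that I am assuming here, and the function names are mine.

```python
TOKENS_PER_PARAM = 20        # Chinchilla rule of thumb from the text above
FLOPS_PER_PARAM_TOKEN = 6    # assumed approximation: FLOPs ~ 6 * params * tokens
READING_WPM = 225            # human reading speed quoted in the text


def chinchilla_tokens(params: float) -> float:
    """Training tokens a compute-optimal model of this size would want."""
    return TOKENS_PER_PARAM * params


def training_flops(params: float, tokens: float) -> float:
    """Rough training cost in floating-point operations."""
    return FLOPS_PER_PARAM_TOKEN * params * tokens


def minutes_to_read(words: float, wpm: float = READING_WPM) -> float:
    """Minutes a person needs to read this many words."""
    return words / wpm


if __name__ == "__main__":
    params = 70e9  # a 70-billion-parameter model
    tokens = chinchilla_tokens(params)
    print(f"{params:.0e} params -> {tokens:.1e} training tokens")
    print(f"training cost ~ {training_flops(params, tokens):.2e} FLOPs")
    print(f"1M words -> {minutes_to_read(1_000_000) / 60:.0f} hours of reading")
```

The first two functions show why data becomes the bottleneck: the tokens needed grow in step with model size. The last one shows why the human stays the bottleneck no matter how fast the AI writes.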

Where We Are Heading

We will not wake up to infinite compute tomorrow. But the path is clear:

- More thinking time: Reasoning models like o3 and Opus 4.5 keep getting better

- Bigger memory: Context windows grow from 200K to 2M to 10M tokens

- Smooth multimodal: Text, voice, image blend together

- Proactive help: AI starts offering help before you ask

- Deep personalization: As we share more data, AI understands us better

Researchers estimate compute could grow 1000 times by 2030. Not infinite, but enough to change everything.

The question is not if these futures come. The question is how we shape them when they do.

Read More

Recent Research (2025)

- Scaling Test-Time Compute with Latent Reasoning - A 3.5B model that reasons in latent space can match a 50B model by thinking longer

- Thinking-Optimal Scaling of Test-Time Compute - Too much thinking can hurt performance; there is an optimal length for each task

- InfiniteICL: Breaking Context Window Limits - Cuts context length by 90% while keeping 103% of performance

- Inference Scaling Laws - Smaller models with smart inference beat bigger models at lower cost

- YETI: Proactive Multimodal AI Agents - AI that knows when to step in and help without being asked

- Scaling Laws for Scalable Oversight - How weaker systems can watch over stronger ones

- Superalignment Survey - The road to keeping superintelligent AI safe

Foundational Papers

- Scaling Laws for Neural Language Models - OpenAI’s base research

- The Scaling Hypothesis - Deep analysis by Gwern

- Beyond Chinchilla-Optimal - New thinking on scaling

- Infini-attention - Google’s work on infinite context transformers

- Planning for AGI and Beyond - OpenAI’s vision

- Superintelligence - Bostrom’s book on the topic